IWF Annual Report 2016

Every five minutes we assess a webpage.

Every nine minutes that webpage shows a child being sexually abused.

The IWF Annual Report is designed to be a reference tool to help inform, educate and encourage global action against online child sexual abuse imagery. This is our record of 2016. We are proud of our work.

What's happening to children

Interactive chart (prediction versus reality): percentage of URLs showing the sexual abuse of children aged 0 to 10 years old and 11 to 15 years old.

*2% represents 16 to 17 year olds, and links or adverts to content IWF already removed

"In celebrating its 20th anniversary the IWF's mission is as important as ever in delivering a real world impact against those making and sharing images showing the sexual abuse of children.

It's a sobering fact that every nine minutes, in the course of their work, the IWF's analysts encounter a webpage which shows children being sexually abused. A huge debt of gratitude is owed to these committed individuals who carry out this unenviable task. The IWF brings together law enforcement and industry to facilitate the removal of webpages containing child sexual abuse imagery and this work continues to have a real effect in stopping the re-victimisation of those who have been abused.

This is a global crime which requires a global response and the IWF has been at the centre of a ground-breaking piece of work providing industry with hashes – digital fingerprints – of known abuse images which originate from UK law enforcement's Child Abuse Image Database (CAID).

So far, around 35,000 hashes have been shared leading to webpages containing child sexual abuse imagery being blocked or removed.

The IWF is a critical player in the WePROTECT Global Alliance to end child sexual exploitation online. I think we can all be proud that other countries look to the IWF as a model of good practice for their own reporting hotlines."

Home Secretary, The Rt Hon Amber Rudd MP

IWF - the Global Experts

We are the global experts at tackling child sexual abuse images on the internet, wherever they’re hosted in the world. We offer a safe and anonymous place for anyone to report these images and videos to us.

The children in these horrific pictures and videos are real. Knowing their suffering has been captured and shared online can haunt a victim for life. Eliminating these images is our vision.

Through the support of our Members, who are international internet companies, our collaboration with 51 hotlines in 45 countries, and law enforcement partners globally, we excel in finding and removing these images.

Internet companies are being asked to demonstrate their leadership by creating a safer online world. Our Members fund our work and use our unique services to make sure their networks are safe. By working hand in hand with us, they make it harder for criminals to share, host, and sell images of children being sexually abused. They show the world how they do the right thing.

Do the right thing – report online child sexual abuse imagery to www.iwf.org.uk

Welcome from Susie

Our Annual Report gives the latest data on what’s happening globally to tackle child sexual abuse images and videos online. We encourage anyone working in this area to use our statistics to help inform their valuable work.

Our analysts work hard to identify and remove as many webpages showing the sexual abuse of children as possible. You can read about how we look after these real-life superheroes. They’re highly trained and excel at what they do, but this isn’t possible without the support, funding, and generous technological contributions from our membership.

2016 marks the truly global launch of the IWF brand. Our headquarters is in Cambridge, UK, with IWF reporting portals based in 16 countries.

So what are the key trends from 2016?

Every five minutes an analyst assesses a webpage. Every nine minutes that webpage shows a child being sexually abused.

For many years, we’ve seen the majority of child sexual abuse images and videos hosted in North America. Hosting has now shifted to Europe, specifically the Netherlands.

We’ve also seen criminals increasingly using masking techniques to hide child sexual abuse images and videos on the internet, leaving clues so that paedophiles can find them. We’ve seen a 112% increase in the use of this technique. We teach others working in this space, such as our colleagues in the Cayman Islands, how to investigate this, with great success.

As new website domains were released into the marketplace, we’ve seen many being purchased and abused to show children being sexually abused – a 258% increase in fact.

Finally, in 2016, we’ve worked to remove 57,335 webpages containing child sexual abuse images or videos. This is fewer than we saw in 2015, but this doesn’t mean the problem is going away. The amount of imagery we’ve seen has actually increased as we’re reviewing more individual images than ever before while we build our Image Hash List. This is a list of digital fingerprints of images of children being sexually abused. This is an additional strand of work for our analysts.

In total for 2016, our analysts assessed 293,818 individual images to create 122,972 quality-assessed unique hashes for our list. Our Image Hash List grows daily and we ended the year on a high when it won the CloudHosting Awards Innovation of the Year 2016.

Susie Hargreaves

IWF CEO

Statistics & Trends

Reports

People report to us at iwf.org.uk, or through one of the 16 portals around the world. All reports come to our headquarters in the UK. If we identify child sexual abuse, we assess the severity of the abuse, the age of the child/children and the location of the files.

Public report vs active searching – what’s the difference?

We actively search the open internet for child sexual abuse images and videos using a combination of our expert analysts’ skills and bespoke webcrawlers. We also take reports from the public. Our statistics distinguish the reports we found by actively searching from those we received through our website or portals.

Total numbers of reports processed

105,420 reports were processed by IWF analysts in 2016, down 7% from 2015. The vast majority were from webpages. 56% contained criminal content covering all three areas of our wider remit.

Please be aware that not all reports processed are found to contain criminal imagery within our remit.

Child sexual abuse imagery on URLs

57,335 URLs were confirmed as containing child sexual abuse imagery, having links to the imagery, or advertising it.

Child sexual abuse imagery on newsgroups

455 newsgroups were confirmed as containing child sexual abuse imagery.

Wider remit work

• In 2016, 4,031 reports of alleged criminally obscene adult content were made to us. Almost all were not hosted in the UK, so they weren’t in our remit.

• 1 URL depicted criminally obscene adult content hosted in the UK received from a public source.

• 1 URL depicted non-photographic child sexual abuse imagery hosted in the UK received from a public source.

Public report accuracy

55,738 public reports were taken by our Hotline, where the person thought they were reporting child sexual abuse imagery. This includes reports from all external sources: law enforcement, Members, professionals and the public. 28% of these reports correctly identified child sexual abuse images. This figure includes newsgroups and duplicate reports, where several reports have correctly identified the same child sexual abuse website.

All child sexual abuse URLs analysed by IWF

53% of children were assessed as aged 10 or under. This is consistent with the trend identified in 2015 which indicated a decrease in younger children being depicted in child sexual abuse imagery assessed by our analysts.

In 2016 we saw a further overall drop in the percentage of children we assessed as being aged 10 or younger. In 2013 and 2014, this figure was fairly consistent at around 80%. In 2015, the figure was 69%. We’ve also seen an increase in reports of children we’ve assessed as 11 to 15.

We think there are two reasons why images of 11 to 15 year olds now make up a larger share of what we see, relative to images of children aged 10 and under.

1. We increasingly see what’s termed “self-produced” content created using webcams and then shared online. This can have serious repercussions for young people and we take this trend very seriously. We’ve looked into this before, and will be launching a new report focusing on online child sexual abuse imagery captured from webcams later in 2017.

2. By analysing our data further, we know that the public are more likely to report images of children aged 10 and younger, and whose abuse is of a more severe level. However, our actively searched-for images total a larger number than public reports. With more analysts and the ability to actively search for content (since 2014), if we encounter a forum with thousands of images of child sexual abuse, we will not only work to remove that forum, but also now capture the URLs of every image on there (which might be pulled from another site) and follow up to remove each and every image hosted on other sites as well. This technique appears to mean we encounter more images of 11 to 15 year olds.

Domain analysis

For domain analysis purposes, the webpages of www.iwf.org.uk, www.iwf.org.uk/report, www.iwf.org.uk/what-we-do and www.iwf.org.uk/become-a-member/join-us are counted as one domain: iwf.org.uk.
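The counting rule above can be sketched in a few lines of Python. This is only an illustration, not the method IWF uses: the helper names `domain_of` and `count_domains` are hypothetical, and stripping a leading `www.` is a simplification that would not correctly handle every hostname.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_of(url: str) -> str:
    """Return the host part of a URL with a leading 'www.' removed (a simplification)."""
    # urlparse only recognises the host when a scheme or '//' is present.
    if "//" not in url:
        url = "//" + url
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host


def count_domains(urls):
    """Count how many webpages fall under each domain."""
    return Counter(domain_of(u) for u in urls)


# The four webpages named in the text above.
pages = [
    "www.iwf.org.uk",
    "www.iwf.org.uk/report",
    "www.iwf.org.uk/what-we-do",
    "www.iwf.org.uk/become-a-member/join-us",
]
counts = count_domains(pages)
print(len(counts), dict(counts))
```

Under this assumption the four webpages collapse to a single domain entry, which is why the number of domains is much smaller than the number of URLs.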

In 2016, 57,335 URLs contained child sexual abuse imagery and these were hosted on 2,416 domains worldwide. This is a 21% increase from 1,991 in 2015.

The 57,335 URLs hosting child sexual abuse content were traced to 50 countries, which is an increase from 48 in 2015. Five top level domains (.com .net .se .io .cc) accounted for 80 per cent of all webpages identified as containing child sexual abuse images and videos.

Abuse of generic top level domains

Our Domain Alerts help our Members in the domain registration sector prevent abuse of their services by criminals attempting to create domains dedicated to distribution of child sexual abuse imagery. Several well-established domains including .com and .biz are known as “Generic Top Level Domains” (gTLDs). Since 2014, many more gTLDs have been released to meet a growing demand for domain names relevant to the web content shown. In 2015, we first saw new gTLDs being used to share child sexual abuse imagery. Many of these new gTLDs were dedicated to illegal imagery and had apparently been registered specifically for this purpose.

The number of websites using a new gTLD, and dedicated to the distribution of child sexual abuse imagery, continued to rise in 2016.

• In 2015, we took action against 436 URLs on 117 websites using new gTLDs.

• In 2016, we took action against 1,559 URLs on 272 websites using new gTLDs – an increase of 258% (153 of these were disguised websites).

Of these 272 websites, 226 were websites dedicated to distributing child sexual abuse content.
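As a worked check, the 258% figure can be reproduced from the two URL counts quoted above, assuming it is the percentage change in URLs actioned between 2015 and 2016. The function name `percent_increase` is illustrative only.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage change from an earlier value to a later one."""
    return (new - old) / old * 100


# URLs actioned on websites using new gTLDs, as quoted in the text.
urls_2015 = 436
urls_2016 = 1559

gtld_url_increase = round(percent_increase(urls_2015, urls_2016))
print(f"{gtld_url_increase}%")
```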

Which types of sites are abused the most?

Image hosting sites and cyberlockers were abused significantly more than other services.

Social networks are among the least abused site types.

In 2016, 54,025 URLs (94%) were hosted on a free-to-use service where no payment was required to create an account or upload the content. In the remaining 6% of cases, the content was hosted on a paid-for service, or it wasn’t possible to tell whether the hosting was free or paid for.

Image Hosts

Image hosts are most consistently abused for distributing child sexual abuse imagery.

Offenders distributing child sexual abuse imagery commonly use image hosts to host the images which appear on their dedicated websites, which can often display many thousands of abusive images. Where our analysts see this technique, they ensure that the website is taken down and each of the embedded images is removed from the image hosting service. By taking this two-step action, the image is removed at its source and from all other websites into which it was embedded even if those websites haven’t yet been found by our analysts.

The award-winning IWF Image Hash List, launched in 2016, can help image hosts to tackle this abuse.

Global hosting of child sexual abuse images

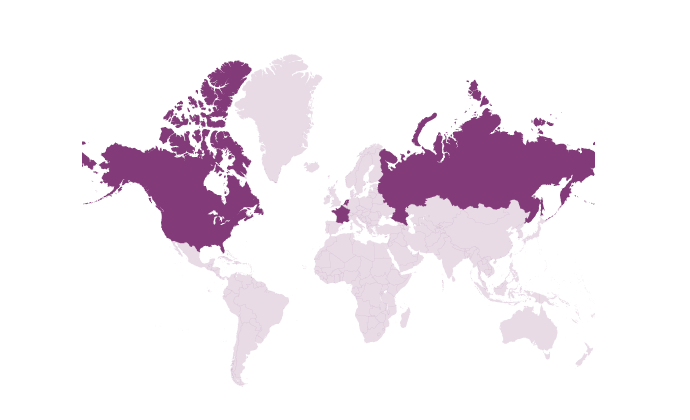

In 2016 the majority of child sexual abuse webpages were hosted in Europe which is a shift from North America. 60% of child sexual abuse content was hosted in Europe, an increase of 19 percentage points. 37% was hosted in North America, a decrease of 20 percentage points. Images and videos hosted in Australasia, South America and in hidden services totalled less than 1% of all confirmed child sexual abuse content in 2016.

Top five countries

92% of all child sexual abuse URLs we identified globally in 2016 were hosted in these five countries.

Hidden services

Hidden services are websites hosted within proxy networks – sometimes also called the "dark web". These websites are challenging as the location of the hosting server can’t be traced in the normal way. We work with the National Crime Agency (NCA) Child Exploitation and Online Protection (CEOP) Command to provide intelligence on any new hidden services which are displaying child sexual abuse imagery. With this intelligence, NCA-CEOP can work with national and international law enforcement agencies to investigate the criminals using these websites.

• Between 2012 and 2015, we saw a year-on-year rise in new hidden services dedicated to child sexual abuse imagery.

• The number of newly identified hidden services declined from 79 in 2015 to 41 in 2016. It is possible this could be the result of increased awareness by law enforcement internationally about hidden services distributing child sexual abuse imagery.

Hidden services commonly contain hundreds or even thousands of links to child sexual abuse imagery that’s hosted on image hosts and cyberlockers on the open web. We take action to remove the child sexual abuse imagery on the open web. Monitoring trends in the way offenders use hidden services to distribute child sexual abuse imagery also helps us when we’re searching for this imagery online.

UK hosting of child sexual abuse imagery – 0.1%

The UK hosts a small volume of online child sexual abuse content – in 2016 this figure was just 0.1% of the global total, down from 0.2% in 2015. When we started in 1996, that figure was 18%.

UK child sexual abuse content removal in minutes

In partnership with the online industry, we work quickly to push for the removal of child sexual abuse content hosted in the UK. The ‘take down’ time clock ticks from the moment we issue a takedown notice to the hosting company to the time the content is removed.

• 60 minutes or less: 52%

• 120 minutes or less: 65%

• More than 120 minutes: 35%

• All UK-hosted content was removed within two days.

We saw an increase in the number of takedown notices which were responded to in two hours or less during 2016. Although the URL numbers are relatively small compared to the global problem, it’s important that the UK remains a hostile place for criminals to host this content.

16 companies’ services in the UK were abused to host child sexual abuse images or videos during 2016. We issued takedown notices to these companies, whether they’re our Members or not.

• 13 companies who were abused weren’t IWF Members.

• 3 companies were IWF Members.

We know that internet companies who’ve joined us as Members are less likely to have their services abused and are quicker at removing child sexual abuse imagery, when needed. We sent 3 takedown notices to IWF Members in 2016 and in each case the content was removed within 120 minutes of the notification. In one case it took just six minutes.

IWF URL List

We provide a list of webpage addresses with child sexual abuse images and videos hosted abroad to companies who want to block or filter them for their users’ protection, and to prevent repeat victimisation. We update the list twice a day, removing and adding URLs.

During 2016:

• The list was sent across all seven continents.

• A total of 53,552 unique URLs were included on the list in 2016.

• On average, 211 new URLs were added each day.

• The list contained an average of 1,951 URLs per day.

Image Hash List

Each image can be given a unique code, known as a hash. A hash can be created from any image and it’s like a digital fingerprint. In this case it’s a hash of a child sexual abuse image and it acts as a unique identifier. The list of these hashes can be used to find duplicate images so we can remove these images at source, protect our staff from seeing several of the same image, and protect our Members’ services. For the children in the images, it offers real hope of eliminating the images for good. The Image Hash List could stop the sharing, storage and even the upload of child sexual abuse content.
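The idea of a hash as a unique identifier for finding duplicates can be sketched generically. The report does not say which hash function the Image Hash List uses, so SHA-256 from Python's standard library is an assumption here, and a cryptographic hash of this kind only matches byte-identical files. The names `fingerprint` and `is_known` are hypothetical, and the inputs are placeholder byte strings.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a hex digest that identifies this exact sequence of bytes."""
    return hashlib.sha256(data).hexdigest()


def is_known(data: bytes, hash_list: set) -> bool:
    """Check whether a file's fingerprint already appears on a list of hashes."""
    return fingerprint(data) in hash_list


# A list holds only fingerprints, never the files themselves.
known_hashes = {fingerprint(b"placeholder file A")}

print(is_known(b"placeholder file A", known_hashes))
print(is_known(b"placeholder file B", known_hashes))
```

Because only the fingerprints are stored and compared, a service can check an upload against the list without anyone having to view the file again.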

In 2015, we announced the trial of our Image Hash List which was rolled out to five Member companies providing internet services. During this time (June to October 2015) nearly 19,000 category 'A' child sexual abuse images were added to the list.

In 2016, we made our Image Hash List service available to all our Members. We also worked with Microsoft on a cloud deployment solution, meaning Members get instant access to the service without costly changes to their own networks.

In order to ensure that the Image Hash List contains only child sexual abuse images, it takes real people – our analysts – to review hundreds of thousands of individual images which may, or may not, show children being sexually abused.

During 2016, we assessed 293,818 individual images, of which we added 122,972 hashes to our Image Hash List.

Of these hashes, 60,821 relate to the worst forms of abuse – images of rape or sexual torture of children.

This means our analysts reviewed 26,711 images each, alongside reviewing public reports, and actively searching for child sexual abuse images and videos.

Keywords List

Did you know that paedophiles create their own language – codes – for finding and hiding child sexual abuse images online?

Each month we give our Members a list of keywords that are used by people looking for child sexual abuse images online. This is to improve the quality of search returns, reduce the abuse of their networks and provide a safer online experience for internet users.

• In December 2016 the keywords list held 442 words associated with child sexual abuse images and videos. It also held 68 words associated with criminally obscene adult content.

Newsgroups

A newsgroup is an internet discussion group dedicated to a specific subject. Users make posts to a newsgroup and others can see them and comment. Also sometimes called ‘Usenet’, newsgroups were the original online forums and a precursor to the World Wide Web.

Our Hotline team monitors the content of newsgroups and issues takedown notices for individual postings of child sexual abuse imagery. We also provide a Newsgroup Alert to Members, which is a notification of child sexual abuse content hosted on newsgroup services so that it can be removed.

We are one of only a handful of hotlines in the world that processes reports on newsgroups.

We actively search for child sexual abuse images in newsgroups.

Throughout 2016, we monitored and reviewed newsgroups and issued takedown notices.

• 629 reports of child sexual abuse images hosted within newsgroups were made to us.

• 2,213 takedown notices were issued for newsgroups containing child sexual abuse images (408 in 2015). That is an increase of 442%. One takedown notice can contain details of several newsgroup postings.

• 60,466 posts were removed from public access, a 193% increase on the 2015 figure of 20,604.

• After monitoring newsgroups, we recommended our Members don’t carry 305 newsgroups containing child sexual abuse images and videos.

Disguised websites

Since 2011, we’ve been monitoring commercial child sexual abuse websites which only display child sexual abuse imagery when accessed by a “digital pathway” of links from other websites. When the pathway is not followed or the website is accessed directly through a browser, legal content is displayed. This means it’s more difficult to find and investigate the illegal imagery.

When we first identified this technique, we developed a way of revealing the illegal imagery, meaning we could remove it, and the websites could be investigated. But the criminals continually change how they hide the illegal imagery, so we adapt in response.

• In 2016, we’ve uncovered 1,572 websites using this method to hide child sexual abuse imagery, an increase of 112% on the 743 disguised websites identified in 2015.

Disguised websites are a significant and increasing problem. By sharing our expertise in uncovering these websites with our sister hotlines and law enforcement worldwide, we help disrupt the operation of commercial child sexual abuse websites.

See how we helped our colleagues in the Cayman Islands when they discovered disguised websites.

Commercial child sexual abuse material

We define commercial child sexual abuse imagery as imagery which was apparently produced or is being used for the purposes of financial gain by the distributor.

Of the 57,335 webpages we confirmed as containing child sexual abuse imagery in 2016, 5,452 (10%) were commercial in nature. This compares to 14,708 (21%) in 2015. That’s a decrease of 62%.

We believe this decrease is due to changes in methods used by commercial distributors to try to avoid being found, particularly by using disguised websites. In 2015, 10,078 of the commercial URLs we actioned were images which were being displayed on separate commercial websites. This year we’ve identified a commercial distribution group that has started to encode their images to prevent us identifying the source host. We took action to have these websites removed and to add these images to our Image Hash List, ensuring our Members can detect these images if they appear anywhere in their networks.

Bitcoin payments for child sexual abuse material

Our Virtual Currency Alerts are sent to Members where we identify Bitcoin wallets associated with child sexual abuse imagery, enabling them to take action to disrupt this activity.

In 2014, we saw Bitcoin being accepted by commercial distributors of child sexual abuse imagery on the open web. This has gradually increased.

• In 2015, we identified 4 websites accepting Bitcoin.

• In 2016, we’ve taken action against 42 websites accepting Bitcoin.

While the number of websites is still low, we are working with Members within the Bitcoin economy to try and keep it that way.

Web brands

Our Website Brands Project started in 2009. Since then, we’ve been tracking the different “brands” of dedicated child sexual abuse websites. These dedicated commercial websites are constantly moving their location to evade detection and our analysts see the same websites appearing on many different URLs over time. Since the project began we’ve identified 2,771 unique website brands.

We analyse:

• hosting patterns,

• registration details, and

• payment information.

We believe the majority of commercial child sexual abuse websites are operated by a small number of criminal groups.

In 2016, the most prolific group accounted for 27% of our total commercial child sexual abuse content reports.

Since 2014, we’ve seen an increase in the number of active "brands" selling child sexual abuse imagery.

• 664 brands seen in 2015.

• 766 active brands in 2016.

We’ve also seen a rise in the number of previously unseen brands:

• In 2015, 427 brands were previously unknown to us.

• In 2016, we saw 573 previously unknown brands, 226 of which were disguised websites.

We monitor trends and work with our financial industry Members to disrupt the commercial distribution of child sexual abuse imagery.

How our analysts spent their time

Our analysts spent most of their time on public and newsgroup reports and on building our Image Hash List.

Our Members

Members are grouped by financial contribution band: £1,000+, £2,500+, £5,000+, £10,000+, £15,000+, £20,000+, £25,000+, £50,000+ and £75,000+.

Changing the world for the better

We want to give people around the world, who don't have a hotline, a way to report online child sexual abuse imagery. To do this, we're providing countries with a local, customised IWF Portal. This provides a safe and anonymous way to send reports directly to our analysts in the UK. We can then assess the reports and take action to have the content removed.

We've set up portals in 16 countries including India, Uganda, Bermuda and the British Virgin Islands. As well as setting up a portal, we also bring together police, governments, child protection organisations and other key people in these countries to raise awareness of reporting child sexual abuse images.

Contact us at [email protected] to find out more.

Our mission to change history

IWF Portals

Anguilla

"This collaboration with the Internet Watch Foundation is an important component of the Ministry’s National Child Safeguarding Project."

"We look forward to continued partnership as we seek to ensure that children in Anguilla live free from all forms of abuse."

Dr. Bonnie Richardson-Lake

Anguilla’s Permanent Secretary, Health & Social Development

Akrotiri and Dhekelia

Ascension Islands

"Ascension Island is very much in its infancy of internet use. Working with the IWF and introducing the portal has given the population a helpful and confidential way to report images and sites that are indecent. Through this ongoing partnership, we will continue to raise awareness of safe internet use and how to report sites of concern."

Rob Parfrey

Social Worker, Ascension Island Government

Bermuda

"Policing a small island jurisdiction 700 miles out to sea from our closest neighbour means that developing partnerships with agencies overseas is key to the success of the Bermuda Police Service. Economies of size and resourcing challenges mean that it would be impossible to set up reporting hotlines here. Our partnership with IWF has helped us set up effective procedures locally and means a safer internet environment for Bermuda’s population. Having an IWF Portal helps us meet our Mission Statement of “Making Bermuda Safer”."

Martin P. Weekes

Assistant Commissioner of Police, Crime & Intelligence

British Virgin Islands

"The Royal Virgin Islands Police Force/British Virgin Islands is extremely happy that the Internet Watch Foundation has established a portal in our territory where persons can report cases of indecent images with children.

The destruction of these vulnerable children’s self-esteem is heart breaking and I support all efforts to trace and punish persons responsible for this serious crime.

I hope that more persons in the community would make use of this tool to file reports of images and bring cases to the attention of the authorities. Over time I would like to see less incidents of this nature in our community."

Alwin James

Deputy Commissioner of Police, British Virgin Islands

Cayman Islands

"Having the IWF Portal available to the Cayman Islands provides us with a greater ability for all members of the community to be involved in combatting child sexual abuse online. We are very happy that a great number of the community would appear to have taken an interest and visited this portal following on from the media launch, and even made reports to IWF. Also, IWF reporting now forms part of all RCIPS investigations whereby suspicious sites are identified during investigations, which can only enhance any investigation. Our partnership in this way has even led to two new child sexual exploitation sites being identified, which is very satisfying."

Joanne Delaney

RCIPS Intelligence Analyst, Major Crime

Falklands

"The Falkland Islands continues to be a safe and secure environment for children and families and is committed to safeguarding children and young adults. We cannot emphasise enough the importance of the IWF initiative and are completely aligned with their aims and objectives and are extremely grateful of their support and guidance in addressing this very important issue."

"This user-friendly portal, coupled with its anonymity, serves to ensure that we are taking every available means and opportunity to combat child sexual abuse and promote internet safety to those who are most vulnerable in our communities and that these crimes do not go unreported."

Andrew Almond-Bell

Director of Emergency Services & Island Security and Principal Immigration Officer, Falkland Islands Government

Gibraltar

"The IWF has done an incredible job in identifying and removing a huge number of images and videos of online child sexual abuse over their 20-year life. The RGP and Gibraltar are now part of that history, and our partnership and common mission helps protect victims of this abuse as well as making the internet a safer place."

Wayne Tunbridge

Detective Chief Inspector,

RGP Crime & Protective Services Division

India

"The Aarambh India-IWF internet hotline is the first Online Redressal Mechanism of its kind in India. We are a country with a fast changing and growing digital landscape which young people are using to explore pleasure and play. While we lean into this future, we still remain a country where conversations around sex and sexuality with our children remain a taboo. In this context our young people are extremely vulnerable and at risk of being exposed to threats in digital spaces. As the country gears up to fight the menace of child sexual abuse material (CSAM), the hotline and our partnership with IWF has proved to be an invaluable first step which helped us understand the issue and kick start a national movement with the hotline as a rallying point. The hotline has triggered the formation of a National Alliance against Child Sexual Abuse & Exploitation with CSAM being the focus."

Uma Subramanian & Siddharth Pillai

Directors, The Aarambh India Initiative

Mauritius

Montserrat

"Montserrat being a diverse population is open to all walks of life which is a challenge from a policing perspective. Being a part of the IWF Portal it has enabled the Royal Montserrat Police Service in better serving the Montserrat community, as we are now better equipped in safeguarding our youths for the future. The collaborative effort of Royal Montserrat Police Service and the Internet Watch Foundation has enabled the residents of Montserrat who pride ourselves in saying we live on a paradise island."

Courtney Rodney

Inspector, Montserrat Police Service

Pitcairn

"We are delighted to be working with the Internet Watch Foundation. Pitcairn’s IWF reporting portal makes it simple for anyone on the island to report on images that concern them, and by reporting them, to help to stop child sexual abuse. Pitcairn Island has established robust child safeguarding measures for children living on and visiting our island; our IWF Portal helps us to also protect children worldwide from abuse and exploitation."

Nicola Hebb

Administrator Pitcairn Island

St Helena

"St Helena Police Directorate are delighted to be working with the Internet Watch Foundation due to our commitment to safeguard those most vulnerable in our community. This partnership working is extremely relevant as the internet becomes more readily accessible on St Helena. Our ability to prosecute offenders who are sharing and downloading indecent images will be enhanced and it will afford us the ability to identify and protect those children in need. We have a team of qualified and experienced Detectives who are ready to respond to any reports received."

Wendy Tinkler

Detective Chief Inspector

Head of CID & Safeguarding

St Helena Police

Tristan Da Cunha

"To the people of Tristan da Cunha having a portal to protect their children while using the internet gives them the peace of mind that they are safe from those who would seek to cause them harm.

It’s important as our children live in a very trusting and protective community that is the remotest in the world, not subjected to the voices of large societies.

Given time we can teach our children the dangers of the internet, and the portal is the first step in helping them prepare to face the realities of living in the wider world."

Conrad J Glass

Inspector of Police

Chairman of the Child Safe Guards Board

Tristan da Cunha

Turks and Caicos

"To have a portal as such where persons can anonymously report incidents of child abuse is an excellent initiative created by the Internet Watch Foundation, and the Turks and Caicos Islands is delighted to be on-board. The user-friendly portal makes it easier for persons who are reluctant to report any knowledge of child abuse as it is critical that these crimes do not go unreported.

Every means necessary to protect our children in the TCI is of high importance and the introduction of the reporting portal is certainly good news and is essential as we move forward to combat online child sexual abuse and promote internet safety."

Mary Durham

Acting Assistant Superintendent

Sexual Offences and Domestic violence unit,

Royal Turks and Caicos Islands Police Force

Uganda

"The online child sexual abuse reporting portal helps us actively participate in the noble effort to remove child sexual abusive material from the web, enabling us as a country to contribute to making the internet a safer place for our children to learn, interact and innovate."

James Saaka

Executive Director, National Information Technology Authority, Uganda

Case studies

20 Years of IWF

At 11.21am on 21 October, 1996, the very first report was made to the newly-formed IWF. It came in by telephone, to a small room in a Victorian town house in Oakington, a village just outside Cambridge, UK.

Twenty years later the IWF is an international world leader in tackling child sexual abuse images online. We marked our anniversary in October at the BT Tower in London and thanked those who make our work possible.

We spoke to the parents of April Jones, a little girl who was murdered by a man who only three hours previously had been viewing child sexual abuse images online. They, together with key people from some of the most influential internet companies in the world, told us why they believe the IWF’s work is more important than ever.

Explore our interactive timeline to see how we've made a difference over the years.

As a founding company of IWF, BT hosted an event for our 20th anniversary, so we could thank those who make our work possible.

Ernie Allen, WePROTECT International Advisory Board

"I remember 1996 well and the early efforts of police to address the alarming increase of child sexual abuse images on the new internet. It was a daunting and poorly understood problem. However, the creation of the Internet Watch Foundation offered a positive, constructive, innovative solution. Over the past 20 years the IWF has changed the way the UK and the world understand and address this complex problem. It has bridged the gap between law enforcement and the internet industry and created a powerful, effective mechanism for reporting and removing this outrageous content. Twenty years later the IWF is not just a leader in the UK, it is truly a global leader. And it remains a shining example of the power of partnership and collaboration as we continue to attack a problem that is even larger and more difficult today than it was 20 years ago. I congratulate the IWF on its vision, leadership and service over the past 20 years, and look forward to even more in the next 20 years."

UK Safer Internet Centre

The UK Safer Internet Centre is a partnership of three leading organisations: SWGfL, Childnet International and Internet Watch Foundation with one mission - to promote the safe and responsible use of technology for young people.

The partnership was appointed by the European Commission as the Safer Internet Centre for the UK in January 2011 and is one of the 31 Safer Internet Centres of the Insafe network. The centre has three main functions:

Awareness Centre

To provide advice and support to children and young people, parents and carers, schools and the children's workforce and to coordinate Safer Internet Day across the UK.

Helpline

To provide support to professionals working with children and young people with online safety issues.

Hotline

An anonymous and safe place to report and remove online child sexual abuse imagery and videos, wherever they are found in the world.

Safer Internet Day

The UK Safer Internet Centre organises the highly successful Safer Internet Day, led by Childnet International. In 2016, SID messages reached 2 in 5 young people (8-17s) and 1 in 5 parents.

IWF Analysts: the real-life superheroes

Every five minutes an IWF analyst assesses a webpage. Every nine minutes that webpage shows a child being sexually abused. It’s tough. But someone has to do this work.

Real-life superheroes work in our hotline. They assess the images and videos we search for, and those we receive from the public.

Not many people realise this, but as well as viewing child sexual abuse material, our analysts have to see things which we don’t deal with, such as images of animals being tortured, or even beheadings. If an image circulates on the internet, it could be reported. It’s imperative we recruit the right people, and look after them.

So how do we look after our staff?

Heidi Kempster, Director of Business Affairs, leads our recruitment and welfare programme.

People who want to be IWF analysts come from all walks of life and span a broad age range. We have a gold standard – and regularly reviewed – welfare system in place to support these exceptional people.

Every analyst applicant has a personal interview with two trained counsellors. This delves into candidates’ personal lives, their views and their support networks. If successful, this is followed up with a ‘normal’ job interview. We then show candidates progressively extreme or criminal content. They can stop the process at any time. After this, we ask them to take time to carefully consider if they can do the job.

Every staff member, whether they work as an analyst or not, undergoes an enhanced criminal background check and we check again every three months.

New analysts go through a specially developed training programme to help them mentally process and cope with exposure to abusive images. During the working day, all analysts take regular, timetabled breaks. Every month, they attend compulsory individual counselling sessions. All employees who see content have an annual full psychological assessment and we offer counselling support to all our employees.

My story - Kate

I used to work as an online moderator for a games company. This job was home-based, which gave me the opportunity to raise my two children while working at the same time.

When my kids were getting older, I decided to get a job outside of home. Basically, I was looking for a job for which I was willing to leave my kids and home. And then I saw the IWF advertising and I decided to apply.

The job's not easy but it’s probably the most rewarding job I've ever done in my life.

Nothing in the world could’ve prepared me for working in the Hotline. I had no idea what to expect. My mind wouldn’t even let me imagine what I was about to see. We’re like a family in the Hotline, and everyone looks out for one another.

At the beginning, I used to keep my job a secret. When friends asked me about it, I used to say I worked in child safety or something similar to that. I’m so incredibly proud of what I’m doing though, that I’ve started to explain what I do. Some of my friends still avoid asking me about it as they’re scared and don’t understand how I can do such a difficult job. Many ask me if I really need to see content. Most of the time, people are supportive of what I do, even though they say they could never do it themselves.

When I walk through the house door, I'm Mum. I'm not an analyst anymore, just Mum.

My story - Isobel

It's definitely the most worthwhile job I've ever had. When I come to work, I do my job. And I do it well. When I finish work, I close that door and don't think about what I've seen anymore. It's very important to have a buffer between work and my home life. I go to the gym, I do a lot of outdoor exercises and love to do acting in my free time.

There are always images that are harder to view than others. I have difficulties whenever I see images of babies, of new-born babies, being tortured or raped. It gets me thinking - how could anybody do this to a baby, to something that small, that fragile?

The Hotline is a very secure place for us. This is where we look at criminal images, day in and day out, and we remove them, together as a team.

I remember some of my friends asking me if I wished I had pursued another job, especially as I studied something completely different to the job I do now. My answer is always ‘no'. I love my job and I'm happy here. I know that going to work means that I'm going to remove images of child sexual abuse, I'm going to stop people stumbling across these images, I'm going to disrupt paedophiles from posting or sharing these images, and I'm going to stop these young victims being re-victimised over and over again. And for me, that's the biggest thing I could've done. Knowing that when my family and friends, and their family and friends, are online, no one will stumble across these horrendous images. That's why I'm really happy here. Because I really am making a difference.

Thank you for all your support

Every five minutes we assess a webpage. Every nine minutes that webpage shows a child being sexually abused. Together, we can do something about it.

If you want to join some of the world’s biggest and most responsible online brands in protecting your customers while helping us to end child sexual abuse imagery online, see our website www.iwf.org.uk, email [email protected] or call +44 1223 203030
